The EEG Neurocontroller

Affective computing, a subcategory of artificial intelligence, detects, processes, interprets, and simulates human emotions. With the continuous development of portable, non-invasive human sensory technologies, such as brain-computer interfaces (BCIs), emotion recognition has garnered the interest of scientists from diverse fields. Facial expressions, speech, behavior (gestures/postures), and physiological signals can be used to identify human emotions. However, the first three may not be effective as individuals may consciously or unconsciously conceal their true emotions (known as social masking). Physiological signals can provide more accurate and objective recognition of emotions. Electroencephalogram (EEG) signals respond in real-time and are more sensitive to changes in affective states than peripheral neurophysiological signals. Therefore, EEG signals can reveal important features of emotional states.

Recently, several EEG-based emotion recognition methods have been developed. Additionally, rapid progress in machine learning and deep learning has enabled computers to understand, recognize, and analyze emotions. This study examines emotion recognition methods based on multi-channel EEG-based BCIs and provides an overview of what has been achieved in this field, including the datasets and methods used to identify emotional states. Following the usual emotion recognition pipeline, we consider the extraction of various EEG features, feature selection/reduction, machine learning methods (for example, k-nearest neighbor, support vector machine, decision tree, artificial neural network, random forest, and naive Bayes), and deep learning methods (for example, convolutional neural networks and recurrent neural networks with long short-term memory).

In addition, we discuss the EEG rhythms that are closely related to emotions, as well as the relationship between specific brain regions and emotions. We also discuss several studies on human emotion recognition published between 2015 and 2021 that use EEG data and compare various machine learning and deep learning algorithms. Finally, this review proposes several challenges and future research directions in the field of human emotion recognition and classification using EEG.

A brain-computer interface (BCI) is a computer communication system that analyzes signals generated by the neural activity of the central nervous system. It is a highly effective communication technology that does not rely on neuromuscular or muscular pathways for communication, commands, and therefore actions. By thinking with intention, the subject generates brain signals that are converted into commands for the output device. As a result, a new output channel is available to the brain. The main goal of BCI is to detect and evaluate the features of signals in the user's brain that indicate the user's intention. These features are then transmitted to an external device that is used to fulfill the user's desired intention. As shown in Figure 1, to achieve this goal, a BCI-based system consists of four sequential components: signal acquisition, pre-processing, conversion, and feedback or device output.

Signal acquisition, the first component of a BCI, is primarily responsible for receiving and recording signals generated by neural activity, as well as sending this data to a pre-processing component to enhance the signal and reduce noise. Methods for acquiring brain signals can be divided into invasive and non-invasive approaches. Invasive methods involve the surgical placement of electrodes either inside or on the surface of the user's brain.

Non-invasive methods record brain activity using external sensors. After preprocessing, the important signal features (characteristics related to the user's intention) are extracted from the irrelevant data and presented in a form that can be converted into output instructions. This component creates discriminative features for the enhanced signal and reduces the size of the data sent to the translation algorithm, which then converts the features into the instructions required by the external device to complete the task (that is, instructions that fulfill the user's intention). The output device is controlled by the instructions produced by the translation algorithm.

It helps users achieve goals such as selecting letters, controlling a mouse, steering a wheelchair, moving a robotic arm, or moving a paralyzed limb using a neuroprosthesis. Computers are currently the most commonly used output device for communication.

Electroencephalography (EEG), which uses electrodes placed on the scalp, can measure neural activity useful for BCI; it is safe, inexpensive, non-invasive, easy to use, portable, and offers high temporal resolution. Since EEG can be used in BCI systems in various fields by the user without the assistance of a technician or operator, it has become popular among end users. BCI has contributed to various fields, including education, medicine, psychology, and military applications.

BCIs are mainly used in the field of affective computing and as a form of assistance for paralyzed people. Spelling systems, medical neuroergonomics, wheelchair control, virtual reality, robot control, mental workload monitoring, games, driver fatigue monitoring, environmental control, biometric systems, and emotion detection are some of the most significant achievements in EEG-based BCI.

Emotion recognition.

In recent years, due to the increasing availability of various electronic devices, people have been spending more time on social media, playing online video games, shopping online, and using other electronic products. However, most modern human-computer interaction (HCI) systems are not capable of processing and understanding emotional data and lack emotional intelligence.

They are not able to recognize human emotions or use emotional data to make decisions and take actions. In advanced intelligent human-computer interaction systems, solving the problem of lack of communication between humans and robots is crucial. Any human-computer interaction system that ignores human emotional states will not be able to respond adequately to these emotions. To solve this problem, machines must be able to understand and interpret human emotional states reliably and accurately.

Since human-computer interaction is studied in various disciplines, including computer science, human factors engineering, and cognitive science, the computer on which an intelligent HCI system runs must be adaptable. In order to generate appropriate responses, it is necessary to accurately understand human communication models. The ability of a computer to understand human emotions and behavior is a crucial component of its adaptability. Therefore, it is important to recognize the user's affective states in order to maximize and improve the performance of HCI systems.

In an HCI system, the interaction between the machine and the operator can be made more intelligent and user-friendly if the computer can accurately understand the emotional state of the human operator in real time. This field of research is called Affective Computing (AC). AC is a subfield of artificial intelligence that relates to HCI through the detection of user affect. One of the key goals of AC is to create ways for machines to interpret human emotions, which can improve their ability to communicate.

Behavior, speech, facial expressions, and physiological signals can be used to identify human emotions. The first three approaches are somewhat subjective. For example, subjects may intentionally conceal their true feelings, which can affect their performance. Physiological signal-based emotion identification is more reliable and objective.

BCI is a portable, non-invasive sensory technology that captures brain signals and uses them as input for systems that understand the correlation between emotions and EEG changes in order to humanize HCI. The central nervous system generates EEG signals, which respond to emotional changes faster than peripheral neural signals. Additionally, EEG signals have been shown to provide important features for emotion recognition.

A scientific perspective on emotions.

In the following sections, we briefly discuss what an emotion is, models of emotion representation, and emotion elicitation experiments.

What are emotions?

An emotion is a complex state that expresses human consciousness and is described as a reaction to environmental stimuli. Emotions are typically reactions to ideas, memories, or events that occur in our environment. They are important for decision-making and interpersonal communication. People make decisions based on their emotional state, and negative emotions can lead to both psychological and physical difficulties. Unfavorable emotions can contribute to poor health, while positive emotions can lead to improved quality of life.

Models of emotions

Historically, psychologists have used two approaches to characterize emotions: the discrete (basic) emotion model and the dimensional model. Dimensional models classify emotions along dimensions or scales, while discrete emotion models comprise several basic emotions grouped into two categories (positive and negative). Several theorists have conducted experiments to define basic emotions and have proposed a number of categorical models. Darwin proposed a theory of emotions, which was later interpreted by Tomkins. Tomkins argued that discrete emotions include nine basic emotions: interest-excitement, surprise-startle, enjoyment-joy, distress-anguish, disgust, fear-terror, anger-rage, contempt-disdain, and shame-humiliation. These nine basic emotions are believed to play an important role in optimal mental health.

Ekman's model is based on another widely accepted theory. According to Ekman, basic emotions should include the following characteristics: (1) emotions are instinctual; (2) different people experience the same emotions in the same situation; (3) different people express basic emotions in the same way; (4) the physiological patterns of different people are consistent when basic emotions are experienced. Ekman and his colleagues identified six basic emotions that can be recognized through facial expressions: sadness, surprise, happiness, disgust, fear, and anger. Other complex (non-basic) emotions, such as shyness, guilt, and contempt, can be generated by these six basic emotions. Many theorists and psychologists have included additional emotions in their sets of basic emotions that are not included in Ekman's six.

Some have divided emotions into a small number of groups, focusing on general feelings such as fear or anger (as negative emotions) and happiness or love (as positive emotions). Others have focused on finer nuances and divided emotions into larger groups. Table 1 shows some of the most basic models of emotions.

Emotions

Surprise, joy, interest, rage, disgust, fear, longing, shame

Fear, sadness, happiness, anger, disgust, surprise

Fury and horror, anxiety, joy

Pain, pleasure

Fear, love, rage

Fear, grief, love, rage

Waiting, rage, fear, panic

Sadness, happiness

Anger, courage, disgust, despondency, despair, desire, fear, hope, hatred, sadness, love

Happiness, sadness, fear, anger, disgust

Desire, interest, happiness, surprise, sadness, surprise

Anger, disgust, contempt,

Anger, fear, excitement, disgust

However, some theorists and researchers believe that the discrete model is limited in its ability to represent specific emotions across a broader range of affective states. In other words, everyday affective states are too complex to be well represented by a small number of discrete categories. As a result, an alternative approach known as the dimensional emotion model has been proposed. In this model, emotions are organized multidimensionally, with each dimension representing an emotional characteristic, so each emotion can be represented as a point in a multidimensional space. Instead of choosing discrete labels, a person can express their feelings on a range of continuous or discrete scales, such as attention-rejection or pleasant-unpleasant. Researchers have proposed many multidimensional methods for modeling emotions, including (a) the two-dimensional Russell model, (b) the continuous two-dimensional Whissell space with evaluation and activation as dimensions, and (c) the three-dimensional Schlosberg emotion model, which adds an attention-rejection dimension to the two-dimensional model.

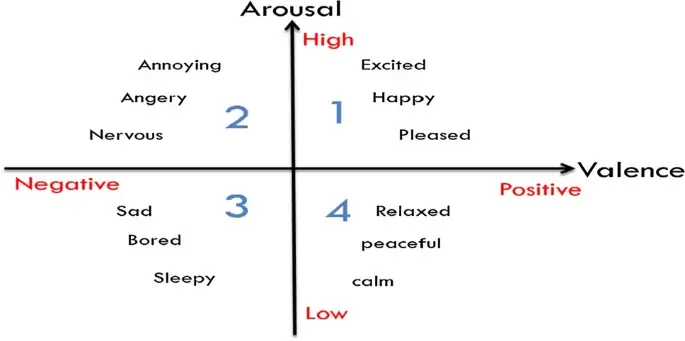

The two-dimensional Russell model of emotions is the one most often used. As shown in Figure 2, the vertical axis measures arousal (the emotional intensity of an experience, ranging from low to high), and the horizontal axis measures valence (the degree of pleasantness, ranging from negative to positive). The arousal-valence coordinate system contains four categories of emotions. Negative emotions lie on the left of the plane and positive emotions on the right, while the arousal axis runs from inactive to active emotions.

Figure 2 shows the four areas. The first area includes high arousal positive valence emotions (HAPV), which range from pleasure to excitement. Area 2 includes high arousal negative valence emotions (HANV), which range from nervous to irritated. Area 3 includes low arousal negative valence emotions (LANV). The last area includes low arousal positive valence emotions (LAPV), which range from calm to relaxed. The first two areas represent high-arousal (active) emotions, while the last two represent low-arousal (inactive) emotions.
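The four areas can be expressed as a simple lookup on the signs of valence and arousal. The sketch below is only an illustration: the function name `russell_quadrant`, the centered scale, and the choice of 0 as the midpoint are assumptions, not part of the reviewed work.

```python
def russell_quadrant(valence: float, arousal: float, midpoint: float = 0.0) -> str:
    """Map a (valence, arousal) point to one of the four area labels.

    Assumes both scales are centered on `midpoint`; values at or above
    the midpoint are treated as "positive" / "high".
    """
    high_arousal = arousal >= midpoint
    positive_valence = valence >= midpoint
    if high_arousal and positive_valence:
        return "HAPV"  # high arousal, positive valence
    if high_arousal:
        return "HANV"  # high arousal, negative valence
    if positive_valence:
        return "LAPV"  # low arousal, positive valence
    return "LANV"      # low arousal, negative valence
```

For ratings on another scale (for example 1 to 9), the midpoint would be passed explicitly, e.g. `russell_quadrant(7.0, 3.0, midpoint=5.0)`.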

Emotion elicitation methods

The ability to elicit the subject's emotional state in an appropriate way, i.e., emotion elicitation, is a crucial step in detecting emotions based on physiological signals. There are three main ways to elicit emotions. The first is to create simulated scenarios. People tend to recall emotionally memorable events from their past, so emotions can be elicited by prompting subjects to recall fragments of past experiences that have a distinct emotional connotation. The problem with this approach is that it cannot guarantee that the subject will generate the appropriate emotion, and the duration of the emotion cannot be measured.

The second is to show videos, music, photos, and other stimulus materials. This is a common way to elicit emotions: participants enter emotional states that can then be labeled objectively. The third is to have the subject play a computer or video game. Computer games affect players not only physically but also psychologically. While watching short films or clips, subjects simply listen to and observe their surroundings. Subjects in computer games, on the other hand, do not just observe the stimuli; they experience the scene firsthand. They take on the role of the game characters, and this affects their emotions accordingly.

The most common resources for eliciting emotions are the International Affective Digital Sounds (IADS) and the International Affective Picture System (IAPS). These datasets contain standardized emotional stimuli, which makes them valuable in experimental research. The IAPS consists of 1,200 photographs divided into 20 groups of 60 images. Each photograph is assigned a valence and an arousal value. The latest edition of the IADS includes 167 digitally recorded natural sounds that are common in everyday life and are rated for valence, dominance, and arousal. Participants labeled the datasets using a self-assessment system.

The authors argue that the emotions elicited by visual or auditory stimuli are comparable. On the other hand, the results of affective labeling of multimedia cannot be generalized to everyday or more interactive situations. As a result, additional research using interactive emotional stimuli is welcome to ensure the generalization of BCI results. To our knowledge, only a few studies have used more interactive situations to generate emotions, such as when people played games or used flight simulators.

Motivations and main contributions

The aim of this review is to help researchers use machine learning techniques to recognize human emotional states quickly and accurately with EEG-based BCIs.

EEG signals in emotion recognition

We need to understand the sources of emotions in our body to teach a computer to understand and recognize them. Emotions can be expressed verbally, for example, using well-known words, or non-verbally, for example, through the tone of our voice, facial expressions, and physiological changes in our nervous system. Since facial expressions and voice can be faked or cannot be considered as the result of a specific emotion, they are not reliable predictors of emotions. Since the user cannot control physiological signals, they are more accurate. Physiological changes are the fundamental sources of emotions in our bodies.

Physiological changes can be divided into two categories: those that affect the central nervous system (CNS) and those that affect the peripheral nervous system (PNS). The spinal cord and the brain make up the CNS. The brain is the control center for everything that happens in our body, and changes in electrical activity are converted into various actions and emotions. An electroencephalogram (EEG) is a test that measures electrical changes in the brain. EEG is described as an alternating electrical activity recorded from the scalp surface using metal electrodes and a conductive medium.

EEG contains a wealth of useful information about many physiological states of the brain. It responds more quickly and sensitively to changes in affective states, making it a particularly valuable tool for understanding human emotions. The low-frequency region is more strongly associated with emotional EEG than the high-frequency region, and negative emotions are more common and intense than positive emotions. In the presence of joyful, sad, and frightening emotions, the average power of beta, alpha, and theta waves on the midline of the brain varies dramatically, indicating that the midline power spectrum of the EEG is one of the most useful indicators for classifying emotions.
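As an illustration of the average band power mentioned above, the following sketch estimates theta, alpha, and beta power from a single channel with a plain periodogram. The band edges are conventional values assumed here for illustration, and `band_powers` is a hypothetical helper, not a function from the reviewed studies.

```python
import numpy as np

# Band edges in Hz; conventional values assumed for illustration.
BANDS = {"theta": (4.0, 8.0), "alpha": (8.0, 13.0), "beta": (13.0, 30.0)}

def band_powers(signal, fs):
    """Return the mean periodogram power in each band for a 1-D signal."""
    signal = np.asarray(signal, dtype=float)
    signal = signal - signal.mean()  # remove the DC offset
    spectrum = np.abs(np.fft.rfft(signal)) ** 2 / (fs * signal.size)
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    return {
        name: float(spectrum[(freqs >= lo) & (freqs < hi)].mean())
        for name, (lo, hi) in BANDS.items()
    }

# Synthetic example: a 10 Hz (alpha-range) oscillation plus weak noise.
fs = 128
t = np.arange(0, 4, 1 / fs)
rng = np.random.default_rng(0)
x = np.sin(2 * np.pi * 10 * t) + 0.1 * rng.standard_normal(t.size)
powers = band_powers(x, fs)
```

In practice an averaged estimate such as Welch's method is usually preferred over a single periodogram because it has lower variance; the version above is kept minimal on purpose.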

EEG-based BCI emotion recognition methodology

The architecture of the EEG-based BCI system for emotion recognition is shown in Fig. 5. EEG signal acquisition, pre-processing, feature extraction, feature selection, emotion classification, and performance evaluation are separate processes that will be discussed in the following sections.

EEG signal acquisition

Nowadays, EEG is a universally accepted standard method for measuring the electrical activity of the brain. Modern EEG equipment includes a set of electrodes, a data storage unit, an amplifier, and a display unit. There are invasive and non-invasive methods for acquiring EEG signals. In the invasive method, the signal-to-noise ratio and signal intensity are high. The electrodes must be surgically implanted into the skull cavity, and the electrodes penetrate the cerebral cortex, which makes the operation difficult. In the non-invasive approach, electrodes are attached to the subject's scalp. This approach is easy to use and is the most common method of data collection in modern non-invasive studies. EEG signals can be effectively obtained using low-cost wearable EEG headsets and helmets that place non-invasive electrodes across the scalp.

Research goals vary; therefore, in EEG experiments aimed at emotion recognition, the collected EEG signals vary, as do the number and placement of electrodes. The International 10-20 electrode placement system is used in most EEG emotion experiments. The number of electrodes ranges from six to 62. Based on Figure 6, it was found that the EEG electrodes associated with emotions were mainly distributed in the frontal lobe (red), parietal lobe (green), occipital lobe (blue), temporal lobe (yellow), and central region (squares). The frontal polar, anterior frontal, frontal, anterior central, temporal, parietal, and occipital regions of the brain are abbreviated as FP, AF, F, FC, T, P, and O, respectively. The left hemisphere is denoted by an odd-numbered suffix, and the right hemisphere is denoted by an even-numbered suffix. These areas correspond exactly to the physiological basis of the origin of emotions.
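The naming convention described above (a region prefix plus an odd or even suffix) can be decoded mechanically. The sketch below is a hypothetical helper for illustration. The "C" (central) prefix and the "z" suffix for midline electrodes are assumptions taken from the standard 10-20 convention; they are not spelled out in the text above.

```python
import re

# Region prefixes from the text; "C" (central) is added as an assumption.
REGIONS = {
    "FP": "frontal polar",
    "AF": "anterior frontal",
    "FC": "anterior central",
    "F": "frontal",
    "C": "central",
    "T": "temporal",
    "P": "parietal",
    "O": "occipital",
}

def describe_electrode(name: str):
    """Return (region, hemisphere) for a label such as 'FP1', 'F4', or 'Fz'."""
    match = re.fullmatch(r"([A-Za-z]+?)(\d+|[zZ])", name)
    if match is None:
        raise ValueError(f"unrecognized electrode label: {name!r}")
    prefix, suffix = match.group(1).upper(), match.group(2)
    if prefix not in REGIONS:
        raise ValueError(f"unknown region prefix: {prefix!r}")
    if suffix in ("z", "Z"):
        hemisphere = "midline"  # assumed 10-20 convention for 'z'
    else:
        # Odd suffix: left hemisphere; even suffix: right hemisphere.
        hemisphere = "left" if int(suffix) % 2 else "right"
    return REGIONS[prefix], hemisphere
```

For example, `describe_electrode("FP1")` yields the frontal polar region on the left hemisphere, and `describe_electrode("O2")` the occipital region on the right.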

Pre-processing of EEG signals involves cleaning and enhancing the signal. EEG signals are inherently weak and can be easily contaminated by noise from both internal and external sources. Noise can be generated by the electrodes or by the human body itself. The term "artifacts" refers to these types of noise. During recording, EEG electrodes can pick up unwanted physiological signals, such as electrooculograms (EOGs) from blinking and electromyograms (EMGs) from neck muscles. When the subject is moving, there are also concerns about motion artifacts caused by cable movement and electrode displacement. As a result, the preprocessing phase is crucial for reducing these artifacts in the raw EEG data, which would otherwise affect the subsequent classification.

To reduce the artifacts in the collected EEG signals, frequency-domain filters can be used to narrow the frequency band of the EEG being studied. High-pass filters, low-pass filters, Butterworth filters, and notch filters are some of the most commonly used. (In EEG practice, high-pass and low-pass filters are also called low-frequency and high-frequency filters, respectively.) High-pass and low-pass filters together retain frequencies between 1 Hz and 50-60 Hz. A Butterworth filter has a wide transition zone and a flat response in the passband and stopband. Notch (band-reject) filters block a specific frequency rather than a range of frequencies; a notch filter is used to remove power-line interference at 50 or 60 Hz, depending on the mains frequency in a particular country. Filters must be applied carefully to avoid distorting the signal.
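A minimal sketch of such a filtering step, using SciPy, might look as follows. The function name `clean_eeg`, the 1-60 Hz band, the 50 Hz mains frequency, the filter order, and the notch quality factor are all illustrative assumptions rather than settings taken from any particular study.

```python
import numpy as np
from scipy.signal import butter, filtfilt, iirnotch, sosfiltfilt

def clean_eeg(x, fs, low=1.0, high=60.0, mains=50.0, order=4, q=30.0):
    """Band-pass a 1-D EEG trace, then notch out the mains frequency."""
    # Zero-phase Butterworth band-pass, in second-order sections for stability.
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    y = sosfiltfilt(sos, x)
    # Narrow notch centered on the mains frequency (50 or 60 Hz by country).
    b, a = iirnotch(mains, q, fs=fs)
    return filtfilt(b, a, y)

# Synthetic trace: DC offset + 10 Hz "brain" rhythm + 50 Hz mains hum.
fs = 256
t = np.arange(0, 8, 1 / fs)
raw = 5.0 + np.sin(2 * np.pi * 10 * t) + np.sin(2 * np.pi * 50 * t)
cleaned = clean_eeg(raw, fs)
```

Forward-backward filtering (`sosfiltfilt`, `filtfilt`) is used here so that the filters introduce no phase shift, which is one way of limiting the signal distortion the text warns about; it is only suitable for offline analysis.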

Common EEG data preprocessing techniques that have been used in various studies include independent component analysis (ICA), principal component analysis (PCA), common average reference (CAR), and common spatial patterns (CSP). When using multi-channel recordings, PCA and ICA tools use blind source separation to remove noise from the original signals, making them useful for removing artifacts and reducing noise. The CSP method identifies spatial filters that can be used to identify signals that correlate with muscle movements. CAR is ideal for noise reduction.
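Of the techniques listed above, the common average reference is the simplest to show in code: at every time sample, the mean across all channels is subtracted from each channel. The sketch below assumes the data are stored as an array of shape (channels, samples); the function name is hypothetical.

```python
import numpy as np

def common_average_reference(eeg):
    """Subtract the instantaneous mean over channels from every channel.

    eeg: array-like of shape (n_channels, n_samples).
    """
    eeg = np.asarray(eeg, dtype=float)
    return eeg - eeg.mean(axis=0, keepdims=True)

# Synthetic example: four channels sharing one large common noise term.
rng = np.random.default_rng(0)
sources = rng.standard_normal((4, 1000))
common_noise = 10.0 * np.sin(np.linspace(0, 20 * np.pi, 1000))
recorded = sources + common_noise
referenced = common_average_reference(recorded)
```

Because the noise term is identical on every channel, it cancels exactly after re-referencing; noise that differs between channels is only partially reduced.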

EEG data on emotional and baseline (non-emotional) states are included in the pre-processed EEG data for emotion detection. In addition, physiological signals show significant heterogeneity between individuals (i.e., variations from one person to another). Different emotions can be elicited at different times and/or in different contexts, even when the subject and the stimulus material are the same. As a result, to reduce the influence of the preceding stimulus material on the subsequent emotional state, as well as the influence of individual variations in physiological signals, the features of the baseline EEG (recorded before any emotional stimulation) were subtracted from the features of the EEG recorded after emotional stimulation. The remaining features were then scaled to a fixed interval.
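The baseline removal and scaling step can be sketched as below. The target interval is not stated in the text, so the [0, 1] range used here is an assumption, as are the function name and the (trials, features) array layout.

```python
import numpy as np

def baseline_correct_and_scale(stim, base, lo=0.0, hi=1.0):
    """Subtract baseline features, then min-max scale each feature column.

    stim: (n_trials, n_features) features after emotional stimulation.
    base: baseline features, broadcastable to the shape of `stim`.
    lo, hi: target interval (assumed to be [0, 1] for illustration).
    """
    diff = np.asarray(stim, dtype=float) - np.asarray(base, dtype=float)
    fmin = diff.min(axis=0)
    fmax = diff.max(axis=0)
    # Guard against constant columns to avoid division by zero.
    span = np.where(fmax > fmin, fmax - fmin, 1.0)
    return lo + (diff - fmin) / span * (hi - lo)

stim = [[5.0, 10.0], [7.0, 14.0], [9.0, 12.0]]
base = [4.0, 9.0]
scaled = baseline_correct_and_scale(stim, base)
```

In a classification setting, the minimum and maximum would normally be computed on the training trials only and then reused for the test trials.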

Individual differences in subjective emotional responses to a similar stimulus pose a serious challenge in emotion recognition research. Consequently, most studies use a limited number of emotion classes. Many DEAP-based emotion recognition studies focus on binary classification problems (high or low arousal, or positive or negative valence), and the target emotional labels are usually determined by applying a simple hard threshold to the subjects' subjective rating data.
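A hard threshold of this kind reduces to a one-line comparison. In the sketch below, the 1-9 rating scale and the threshold of 5 are assumptions chosen for illustration; individual studies may use a different cut-off or treat the boundary value differently.

```python
def binarize_ratings(ratings, threshold=5.0):
    """Turn subjective ratings into binary labels: 1 = high, 0 = low.

    Assumes ratings on a 1-9 scale; ratings strictly above `threshold`
    are labeled high. Both choices are illustrative assumptions.
    """
    return [1 if rating > threshold else 0 for rating in ratings]

arousal_ratings = [1.0, 4.9, 5.0, 5.1, 9.0]
arousal_labels = binarize_ratings(arousal_ratings)
```

Note that ratings close to the threshold (4.9 versus 5.1) receive opposite labels even though the reported experience is nearly identical, which is one reason such labels are noisy.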

Independent Component Analysis

Independent Component Analysis (ICA) is a statistical technique for finding linear projections of observed data that maximize mutual independence. When used for blind source separation (BSS), ICA seeks to recover independent sources from mixtures of these sources using multi-channel observations. When processing EEG signals, ICA decomposes the recording into components that reflect independent neural activity originating from different areas of the brain and components that are unrelated to neural sources (artifact components). The latter are associated with eye movements, blinking, the heart, muscles, and line noise, and can be easily identified based on their spatio-temporal characteristics.
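In its simplest form, the ICA model can be written as follows. The notation is introduced here for illustration and assumes as many sources as channels and an invertible mixing matrix: x(t) is the vector of channel recordings, s(t) the vector of independent sources, A the unknown mixing matrix, W the estimated unmixing matrix, and D a diagonal matrix with zeros for the components judged to be artifacts and ones elsewhere.

```latex
\mathbf{x}(t) = A\,\mathbf{s}(t), \qquad
\hat{\mathbf{s}}(t) = W\,\mathbf{x}(t), \quad W \approx A^{-1}, \qquad
\mathbf{x}_{\mathrm{clean}}(t) = W^{-1} D\, W\,\mathbf{x}(t)
```

The last expression describes artifact removal: the recording is projected onto the estimated components, the artifact components are zeroed, and the remaining components are projected back to the channel space.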

If you are interested in this neural network and it can help you solve your business and other technical problems, please send an application to info@ai4b.org

Reference: https://link.springer.com/article